
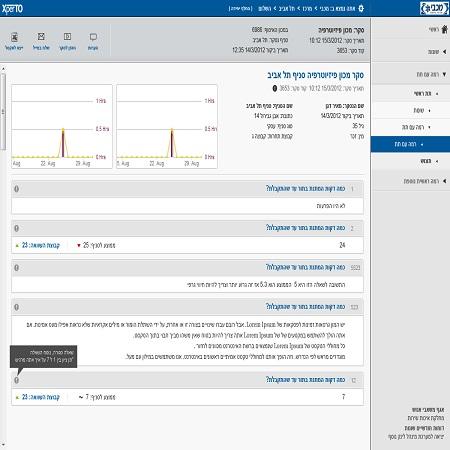

EnetPulse provides a service that pushes data in XML format, based on a platform named SpoCoSy (Sports Content System).

The basic mechanism is simple: the server that is to receive the data exposes a PHP file that can be accessed from outside the server (in effect, a WebService).

The address of this file is given to EnetPulse, which calls the WebService with the requested data.

The received data is then saved on the server as an XML file, and a row is added to the DB table of files waiting to be processed.
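A minimal sketch of the receiving side, written in Python rather than PHP for illustration. It assumes the pushed XML arrives as the raw request body, that files go into a hypothetical `incoming` directory, and that the queue is a hypothetical `files_to_process` table; SQLite stands in for MySQL, and the HTTP layer is left out (`receive_push` is what the endpoint would call with the body).

```python
import sqlite3
import tempfile
import uuid
from pathlib import Path

# Hypothetical queue table; the real SpoCoSy table layout is not described here.
QUEUE_DDL = """
CREATE TABLE IF NOT EXISTS files_to_process (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    path TEXT NOT NULL,
    received_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
)
"""


def receive_push(body: bytes, incoming_dir: Path, conn: sqlite3.Connection) -> Path:
    """Save one pushed XML payload to disk and enqueue it for the Parser."""
    incoming_dir.mkdir(parents=True, exist_ok=True)
    # A unique file name keeps concurrent pushes from overwriting each other.
    path = incoming_dir / f"{uuid.uuid4().hex}.xml"
    path.write_bytes(body)
    # The row is added only after the file is fully written, so the Parser
    # never sees a file that is still being saved.
    with conn:  # commits on success, rolls back on error
        conn.execute("INSERT INTO files_to_process (path) VALUES (?)", (str(path),))
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        conn = sqlite3.connect(":memory:")
        conn.execute(QUEUE_DDL)
        saved = receive_push(b"<spocosy/>", Path(tmp) / "incoming", conn)
        print(saved.name, conn.execute("SELECT COUNT(*) FROM files_to_process").fetchone()[0])
```

Writing the file before inserting the row is a deliberate ordering: the queue table only ever points at complete files.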

The final step is to run the "Parser" on these XML files and insert their content into the DB.
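The parsing step could look roughly like this. SpoCoSy's actual XML layout is not described here, so the `<event id="…" name="…">` elements and the `event` table are hypothetical; SQLite again stands in for MySQL. Each file is written in a single transaction, so a file is either fully loaded or not loaded at all.

```python
import sqlite3
import xml.etree.ElementTree as ET


def parse_file(xml_text: str, conn: sqlite3.Connection) -> int:
    """Parse one pushed XML document and upsert its <event> elements.

    Returns the number of rows written. Raises ET.ParseError for malformed
    XML, and TypeError/ValueError if an id attribute is missing or invalid.
    """
    root = ET.fromstring(xml_text)
    rows = [(int(e.get("id")), e.get("name", "")) for e in root.iter("event")]
    with conn:  # all rows of one file are committed together, or none are
        # A later push for the same id replaces the earlier row.
        conn.executemany("INSERT OR REPLACE INTO event (id, name) VALUES (?, ?)", rows)
    return len(rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE event (id INTEGER PRIMARY KEY, name TEXT)")
    print(parse_file('<events><event id="1" name="Final"/></events>', conn))
```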

In this project we were required to:

1. Install the infrastructure that runs the system: an Apache server, the PHP engine and a MySQL database.

2. Set up and run the SpoCoSy system: create the DB schema and expose the WebService to EnetPulse.

3. Create a backup schema (a copy of the main schema's data that is up to date at all times).

4. Test the durability of the system in case of a Parser failure, abnormal files, or server maintenance.

5. Develop a mechanism that runs the Parser and alternates between tasks optimally.

6. Test the system under load.

7. Create and install a production environment.

After creating the environment and getting the system running, we started developing the alternating mechanism.

The customer required that whenever data is updated in the database, some SQL code must run (performing statistical calculations on the data and storing the results in other tables).

Another requirement was that no data may be updated while this algorithm runs (so that it never works on partial data).
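Besides the alternation itself, the "no updates during the algorithm" rule can also be enforced at the database level. A minimal sketch, with SQLite standing in for MySQL (where `LOCK TABLES ... WRITE` would play a comparable role): the statistics run inside an exclusive transaction, so no other connection can write until it commits. The `event` and `event_stats` tables and the count-per-category statistic are hypothetical placeholders for the customer's SQL.

```python
import sqlite3


def run_statistics(conn: sqlite3.Connection) -> None:
    """Recompute a hypothetical summary table while no writer can modify the data.

    The connection must be in autocommit mode (isolation_level=None) so that
    this function controls the transaction boundaries itself.
    """
    conn.execute("BEGIN EXCLUSIVE")  # other connections cannot write until COMMIT
    try:
        conn.execute("DELETE FROM event_stats")
        conn.execute(
            "INSERT INTO event_stats (category, n_events) "
            "SELECT category, COUNT(*) FROM event GROUP BY category"
        )
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")  # leave the previous results intact on failure
        raise


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("CREATE TABLE event (id INTEGER PRIMARY KEY, category TEXT)")
    conn.execute("CREATE TABLE event_stats (category TEXT, n_events INTEGER)")
    conn.executemany("INSERT INTO event (category) VALUES (?)", [("a",), ("a",), ("b",)])
    run_statistics(conn)
    print(conn.execute("SELECT * FROM event_stats ORDER BY category").fetchall())
```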

Because data arrives from SpoCoSy at a very high rate (tens of thousands of updates per day), the algorithm's execution had to be scheduled so that, on the one hand, it would not hinder the Parser's data processing and, on the other, the algorithm's results would stay up to date.

To achieve this, we decided to use a threshold value to determine when the algorithm runs.

As long as more than 10 files are waiting for the Parser, the Parser takes precedence.

Once there are fewer than 10 files, the algorithm runs.

Of course, files keep arriving while the algorithm runs, so by the time it finishes there are typically more than 10 files to process again, and priority goes back to the Parser.
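The threshold rule can be sketched as a small scheduling function plus a loop. Two details are assumptions not stated in the text: exactly 10 pending files is treated as "algorithm may run", and a hypothetical `dirty` flag skips the algorithm when the Parser has written nothing since its last run (while still letting the Parser drain a short queue). The callables passed to `run_loop` stand in for the real Parser and statistics code.

```python
from __future__ import annotations

from typing import Callable

THRESHOLD = 10  # from the text: above 10 queued files, the Parser has priority


def choose_task(pending: int, dirty: bool, threshold: int = THRESHOLD) -> str:
    """Return the next task: 'parse', 'algorithm' or 'idle'.

    `dirty` (an assumption) means the Parser has written data since the
    algorithm last ran, so its results are stale.
    """
    if pending > threshold:
        return "parse"        # backlog too large: the Parser takes precedence
    if dirty:
        return "algorithm"    # small backlog and stale results: recompute
    if pending > 0:
        return "parse"        # results are fresh: drain the small queue
    return "idle"


def run_loop(pending_count: Callable[[], int],
             parse_one: Callable[[], None],
             run_algorithm: Callable[[], None],
             max_steps: int) -> list[str]:
    """Run the alternating scheduler for max_steps steps and log each decision."""
    dirty = False
    log: list[str] = []
    for _ in range(max_steps):
        task = choose_task(pending_count(), dirty)
        if task == "parse":
            parse_one()
            dirty = True
        elif task == "algorithm":
            run_algorithm()
            dirty = False
        log.append(task)
    return log
```

For example, starting with 12 queued files where each parse removes one file and three new files arrive during each algorithm run, five steps yield parse, parse, algorithm, parse, parse: the algorithm runs once the queue drops to 10, and the files that arrive meanwhile hand priority back to the Parser.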

Files that the Parser has finished processing are moved to the 'Parsed' folder and deleted from the list of files in the DB.

Similarly, we developed a mechanism for damaged files: these are moved to an 'Error' folder and also deleted from the list of files in the DB.
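Handling of finished and damaged files might be sketched as follows. The `files_to_process` table, the folder arguments and the injected `parse` callable are hypothetical, and any exception during reading or parsing is treated as "damaged", which is a simplifying assumption.

```python
import shutil
import sqlite3
import tempfile
import xml.etree.ElementTree as ET
from pathlib import Path
from typing import Callable


def finish_entry(conn: sqlite3.Connection, entry_id: int, path: Path,
                 parsed_dir: Path, error_dir: Path,
                 parse: Callable[[str], object]) -> bool:
    """Parse one queued file, move it to Parsed/ or Error/, and dequeue it.

    Returns True if the file was parsed successfully.
    """
    try:
        parse(path.read_text(encoding="utf-8"))
        target_dir, ok = parsed_dir, True
    except Exception:
        # Unreadable file, malformed XML or bad values: treat as damaged.
        target_dir, ok = error_dir, False
    target_dir.mkdir(parents=True, exist_ok=True)
    shutil.move(str(path), str(target_dir / path.name))
    with conn:  # remove the entry from the queue in its own transaction
        conn.execute("DELETE FROM files_to_process WHERE id = ?", (entry_id,))
    return ok


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        base = Path(tmp)
        conn = sqlite3.connect(":memory:")
        conn.execute("CREATE TABLE files_to_process (id INTEGER PRIMARY KEY, path TEXT)")
        bad = base / "bad.xml"
        bad.write_text("<unclosed>", encoding="utf-8")
        conn.execute("INSERT INTO files_to_process VALUES (1, ?)", (str(bad),))
        print(finish_entry(conn, 1, bad, base / "Parsed", base / "Error", ET.fromstring))
```

Because the file is moved before its row is deleted, a crash between the two steps leaves a queue row pointing at a file that is no longer in the incoming folder; a restart routine would need to tolerate that, which is the kind of case durability testing should exercise.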

The backup schema was kept up to date with triggers on the write operations (INSERT, UPDATE and DELETE) of the main schema.
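A minimal sketch of trigger-based mirroring, run through Python's `sqlite3` module. SQLite triggers cannot write into an attached database, so the backup schema is modelled here as a hypothetical `event_backup` table in the same database; in MySQL the trigger bodies would target the corresponding table in the backup database instead, and the trigger syntax differs slightly (`FOR EACH ROW`).

```python
import sqlite3

# One AFTER trigger per write operation keeps event_backup identical to event.
MIRROR_DDL = """
CREATE TABLE event (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE event_backup (id INTEGER PRIMARY KEY, name TEXT);

CREATE TRIGGER event_ai AFTER INSERT ON event BEGIN
    INSERT INTO event_backup (id, name) VALUES (NEW.id, NEW.name);
END;

CREATE TRIGGER event_au AFTER UPDATE ON event BEGIN
    UPDATE event_backup SET id = NEW.id, name = NEW.name WHERE id = OLD.id;
END;

CREATE TRIGGER event_ad AFTER DELETE ON event BEGIN
    DELETE FROM event_backup WHERE id = OLD.id;
END;
"""


def create_mirrored_schema(conn: sqlite3.Connection) -> None:
    """Create the main table, its backup copy, and the mirroring triggers."""
    conn.executescript(MIRROR_DDL)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_mirrored_schema(conn)
    conn.execute("INSERT INTO event (id, name) VALUES (1, 'A')")
    conn.execute("UPDATE event SET name = 'A2' WHERE id = 1")
    print(conn.execute("SELECT * FROM event_backup").fetchall())
```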

For some of the tables in the backup schema we were asked to keep the entire history of the data (in other words, never update or delete rows, only insert them).

Since these tables had primary keys, there was a concern that some inserts would fail with a primary key violation. The simple and obvious solution was to drop the primary key on these tables.
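An append-only history table without a primary key might look like this, again with SQLite standing in for MySQL and hypothetical table, column and trigger names. Every write on `event` becomes a new history row tagged with the operation, so the same `id` can appear many times. A non-unique index on `id` keeps lookups fast without reintroducing the uniqueness constraint; adding a separate auto-increment surrogate key would be another way to avoid the violation.

```python
import sqlite3

HISTORY_DDL = """
CREATE TABLE event (id INTEGER PRIMARY KEY, name TEXT);

-- No PRIMARY KEY: one row per change, so the same id may repeat.
CREATE TABLE event_history (
    id INTEGER NOT NULL,
    name TEXT,
    op TEXT NOT NULL,                               -- 'I', 'U' or 'D'
    changed_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE INDEX event_history_id ON event_history (id);  -- non-unique

CREATE TRIGGER event_hist_ai AFTER INSERT ON event BEGIN
    INSERT INTO event_history (id, name, op) VALUES (NEW.id, NEW.name, 'I');
END;
CREATE TRIGGER event_hist_au AFTER UPDATE ON event BEGIN
    INSERT INTO event_history (id, name, op) VALUES (NEW.id, NEW.name, 'U');
END;
CREATE TRIGGER event_hist_ad AFTER DELETE ON event BEGIN
    INSERT INTO event_history (id, name, op) VALUES (OLD.id, OLD.name, 'D');
END;
"""

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(HISTORY_DDL)
    conn.execute("INSERT INTO event (id, name) VALUES (1, 'A')")
    conn.execute("UPDATE event SET name = 'A2' WHERE id = 1")
    print(conn.execute("SELECT id, name, op FROM event_history").fetchall())
```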

